Pomdp-based Statistical Spoken Dialog Systems a Review Blog

Progress in Dialog Management Model Research

This article is the result of the collaborative efforts of the following experts and researchers in the Intelligent Robot Conversational AI Team: Yu Huihua and Jiang Yixuan from Cornell University, as well as Dai Yinpei (nicknamed Yanfeng), Tang Chengguang (Enzhu), Li Yongbin (Shuide), and Sunjian (Sunjian) from Alibaba DAMO Academy.

Many efforts have been made to develop highly intelligent human-machine dialog systems since research began on artificial intelligence (AI). Alan Turing proposed the Turing test in 1950[1]. He believed that machines could be considered highly intelligent if they passed the Turing test. To pass this test, the machine had to communicate with a real person so that this person believed they were talking to another person. The first-generation dialog systems were mainly rule-based. For example, the ELIZA system[2] developed by MIT in 1966 was a psychotherapy chatbot that matched patterns using templates. The flowchart-based dialog systems popular in the 1970s simulated state transitions in the dialog flow based on the finite state automaton (FSA) model. These machines have transparent internal logic and are easy to analyze and debug. Yet, they are less flexible and scalable due to their high dependency on expert intervention.

Second-generation dialog systems driven by statistical data (hereinafter referred to as statistical dialog systems) emerged with the rise of big data technology. At that time, reinforcement learning was widely studied and applied in dialog systems. A representative example is the statistical dialog system based on the Partially Observable Markov Decision Process (POMDP) proposed by Professor Steve Young of Cambridge University in 2005[3]. This system is significantly superior to rule-based dialog systems in terms of robustness. It maintains the state of each round of dialog through Bayesian inference based on speech recognition results and then selects a dialog policy based on the dialog state to generate a natural language response. With a reinforcement learning framework, the POMDP-based dialog system constantly interacts with user simulators or real users to detect errors and optimize the dialog policy accordingly. A statistical dialog system is a modular system that is not highly dependent on expert intervention. Nevertheless, it is less scalable, and the model is difficult to maintain.
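To make the Bayesian state maintenance concrete, here is a minimal sketch of a single belief update over one slot. It is a deliberate simplification: the full POMDP update also involves a state transition model and the last system action, both omitted here. The slot values and probabilities are hypothetical.

```python
def update_belief(prior, likelihood):
    """Bayes rule: posterior(s) is proportional to likelihood(s) * prior(s)."""
    unnormalized = {s: prior[s] * likelihood.get(s, 0.0) for s in prior}
    total = sum(unnormalized.values())
    if total == 0.0:
        # The observation rules out every state; fall back to the prior.
        return dict(prior)
    return {s: p / total for s, p in unnormalized.items()}


# Hypothetical belief over the user's goal for a "food" slot.
belief = {"italian": 0.5, "indian": 0.3, "thai": 0.2}

# Hypothetical speech recognition confidence for each value in this round.
observation = {"italian": 0.2, "indian": 0.7, "thai": 0.1}

belief = update_belief(belief, observation)
best = max(belief, key=belief.get)
```

Because the belief is a distribution rather than a single guess, a noisy recognition result shifts probability mass instead of overwriting the state, which is where the robustness over rule-based systems comes from.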

In recent years, with breakthroughs in deep learning in the image, speech, and text fields, third-generation dialog systems built around deep learning have emerged. These systems still adopt the framework of the statistical dialog systems, but apply a neural network model in each module. Neural network models have powerful representation, language classification, and generation capabilities. Therefore, models based on natural language are transformed from generative models, such as Bayesian networks, into deep discriminative models, such as Convolutional Neural Networks (CNNs), Deep Neural Networks (DNNs), and Recurrent Neural Networks (RNNs)[5]. The dialog state is obtained by directly calculating the maximum conditional probability instead of the Bayesian a posteriori probability. Deep reinforcement learning models are also used to optimize the dialog policy[6]. In addition, the success of end-to-end sequence-to-sequence technology in machine translation makes end-to-end dialog systems possible. Facebook researchers proposed a task-oriented dialog system based on memory networks[4], presenting a new way forward in the research of end-to-end task-oriented dialog systems in third-generation dialog systems. In general, third-generation dialog systems are better than second-generation dialog systems, but a large amount of tagged data is required for effective training. Therefore, improving the cross-domain migration and scalability of the model has become an important area of research.

Common dialog systems are divided into the following three types: chat-oriented, task-oriented, and Q&A-oriented. In a chat-oriented dialog, the system generates interesting and informative natural responses to allow the human-machine dialog to proceed[7].

In a Q&A-oriented dialog, the system analyzes each question and finds a correct answer from its libraries[8]. A task-oriented dialog (hereinafter referred to as a task dialog) is a task-driven multi-round dialog. The machine determines the user's requirements through understanding, active inquiry, and clarification, makes queries by calling an Application Programming Interface (API), and returns the correct results. Generally, a task dialog is a sequential decision-making process. During the dialog, the machine updates and maintains the internal dialog state by understanding user statements and then selects the optimal action based on the current dialog state, such as determining the requirement, querying constraints, and providing results.

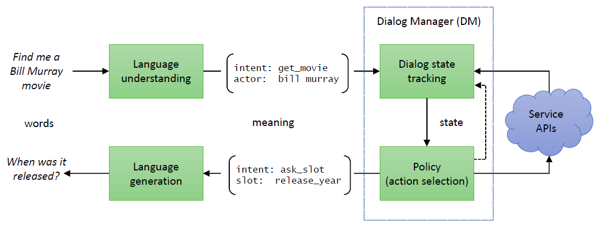

Task-oriented dialog systems are divided by architecture into two categories. One type is a pipeline system that has a modular structure[5], as shown in Figure 1. It consists of four key modules:

- Natural Language Understanding (NLU): Identifies and parses a user's text input to obtain semantic tags that can be understood by computers, such as slot-values and intents.

- Dialog State Tracking (DST): Maintains the current dialog state based on the dialog history. The dialog state is the cumulative meaning of the dialog history, which is generally expressed as slot-value pairs.

- Dialog Policy: Outputs the next system action based on the current dialog state. The DST module and the dialog policy module are collectively referred to as the dialog manager (DM).

- Natural Language Generation (NLG): Converts system actions to natural language output.

This modular system structure is highly interpretable, easy to implement, and applied in most practical task-oriented dialog systems in the industry. However, this structure is not flexible enough. The modules are independent of each other and difficult to optimize together. This makes it difficult to adapt to changing application scenarios. Additionally, due to the accumulation of errors between modules, the upgrade of a single module may require the adjustment of the whole system.
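The four modules can be sketched as four small functions chained in a loop. This is only an illustration of the data flow: the keyword matching, the two slots, and the response templates are hypothetical stand-ins for trained models.

```python
def nlu(utterance):
    """Toy keyword NLU: return the slot-value pairs found in the text."""
    text = utterance.lower()
    slots = {}
    for food in ("italian", "indian"):
        if food in text:
            slots["food"] = food
    for area in ("north", "south"):
        if area in text:
            slots["area"] = area
    return slots


def dst(state, slots):
    """Accumulate slot-value pairs over the dialog history."""
    new_state = dict(state)
    new_state.update(slots)
    return new_state


def policy(state):
    """Request the first missing slot; otherwise provide results."""
    for slot in ("food", "area"):
        if slot not in state:
            return ("request", slot)
    return ("inform", None)


def nlg(action, state):
    """Convert a system action into a natural language response."""
    act, slot = action
    if act == "request":
        return f"What {slot} would you like?"
    return f"Searching for {state['food']} restaurants in the {state['area']}."


state = {}
replies = []
for user_turn in ["I want Indian food", "In the north please"]:
    state = dst(state, nlu(user_turn))
    replies.append(nlg(policy(state), state))
```

Each function only sees the output of the previous one, which is what makes the pipeline easy to inspect and also why an NLU mistake propagates unchanged into the state, the policy, and the response.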

Figure 1. Modular structure of a task-oriented dialog system[41]

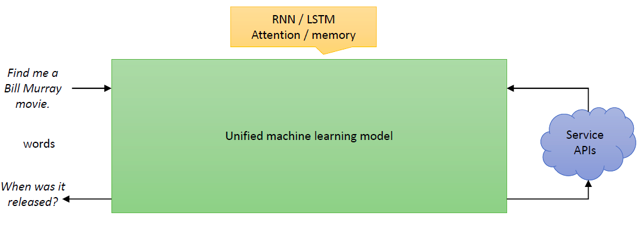

Another implementation of a task-oriented dialog system is an end-to-end system, which has been a popular field of academic research in recent years[9-11]. This type of structure trains an overall mapping from the natural language input on the user side to the natural language output on the machine side. It is highly flexible and scalable, reducing labor costs for design and removing the isolation between modules. However, the end-to-end model places high requirements on the quantity and quality of data and does not provide clear modeling for processes such as slot filling and API calling. This model is still being explored and is as yet rarely applied in the industry.

Figure 2. End-to-end structure of a task-oriented dialog system[41]

With higher requirements on product experience, actual dialog scenarios become more complex, and DM needs to be further improved. Traditional DM is usually built in a clear dialog script system (searching for matching answers, querying the user intent, and then ending the dialog) with a predefined system action space, user intent space, and dialog ontology. However, due to unpredictable user behaviors, traditional dialog systems are less responsive and have greater difficulty dealing with undefined situations. In addition, many actual scenarios require a cold start without sufficient tagged dialog data, resulting in high data cleansing and tagging costs. DM based on deep reinforcement learning requires a large amount of data for model training. According to the experiments in many academic papers, hundreds of complete sessions are required to train a dialog model, which hinders the rapid development and iteration of dialog systems.

To overcome the limitations of traditional DM, researchers in academic and industry circles have begun to focus on how to strengthen the usability of DM. Specifically, they are working to address the following shortcomings in DM:

- Poor scalability

- Insufficient tagged data

- Low training efficiency

I will introduce the latest research results in terms of the preceding aspects.

Cutting-Edge Research on Dialog Manager

Shortcoming 1: Poor Scalability

As mentioned above, DM consists of the DST and dialog policy modules. The most representative traditional DST is the neural belief tracker (NBT) proposed by scholars from Cambridge University in 2017[12]. NBT uses neural networks to track the state of complex dialogs in a single domain. By using representation learning, NBT encodes system actions in the previous round, user statements in the current round, and candidate slot-value pairs to calculate semantic similarity in a high dimensional space and detect the slot value output by the user in the current round. Therefore, NBT can identify slot values that are not in the training set but are semantically similar to those in the set by using the word vector representation of the slot-value pair. This avoids the need to create a semantic dictionary. As such, the slot values can be extended. Later, Cambridge scholars further improved NBT[13] by changing the input slot-value pair to the domain-slot-value triple. The recognition results of each round are accumulated using model learning instead of manual rules. All data is trained by the same model. Knowledge is shared among different domains, leaving the total number of parameters unchanged as the number of domains increases. Among traditional dialog policy research, the most representative is the ACER-based policy optimization proposed by Cambridge scholars[6].

By applying the experience replay technique, the authors tried both the trust region actor-critic model and the episodic natural actor-critic model. The results showed that the deep actor-critic (AC) reinforcement learning algorithms were the best in sample utilization efficiency, algorithm convergence, and dialog success rate.

However, traditional DM still needs to be improved in terms of scalability, specifically in the following three respects:

- How to deal with changing user intents.

- How to deal with changing slots and slot values.

- How to deal with changing system actions.

Changing User Intents

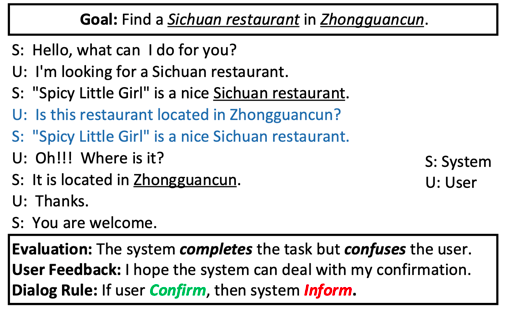

If a system does not take the user intent into account, it will often provide nonsensical answers. As shown in Figure 3, the user's "confirm" intent is not considered. A new dialog script must be added to help the system deal with this problem.

Figure 3. Example of a dialog with new intent[15]

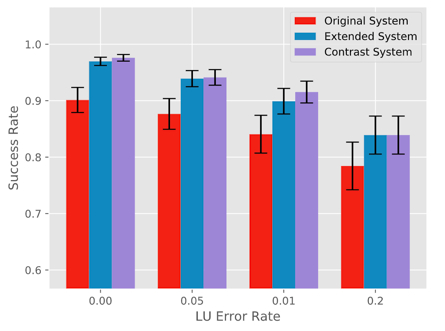

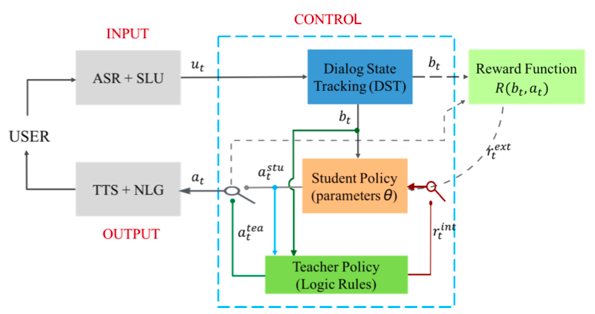

The traditional model outputs a fixed one-hot vector over the old intent categories. Once a new user intent not in the training set appears, the vectors need to be changed to include the new intent category, and the new model needs to be retrained. This makes the model less maintainable and scalable. One paper[15] proposes a teacher-student learning framework to solve this problem. In the teacher-student training architecture, the old model and logical rules for new user intents are used as the teacher, and the new model as the student. This architecture uses knowledge distillation technology. Specifically, for the old intent set, the probability output of the old model directly guides the training of the new model. For the new intent, the logical rules are used as new tagged data to train the new model. In this way, the new model no longer needs to interact with the environment for retraining. The paper presented the results of an experiment performed on the DSTC2 dataset. The confirm intent is deliberately removed and then added as a new intent to the dialog ontology to verify whether the new model is adaptable. Figure 4 shows the experiment result. The new model (Extended System), the model containing all intents (Contrast System), and the old model are compared. The result shows that the new model achieves satisfactory success rates in extended new intent identification at different noise levels.

Figure 4. Comparison of various models at different noise levels
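The teacher-student idea can be illustrated with a small sketch of how a training target might be assembled. The source does not give the paper's exact loss, so everything below is an assumption for illustration: the `teacher_target` helper, the choice of cross-entropy, and all numbers are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def cross_entropy(target, predicted):
    """Cross-entropy between a target distribution and a prediction."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted) if t > 0)


def teacher_target(old_probs, rule_fires):
    """Build a target over the old intents plus one new intent.

    Assumption: when the logic rule for the new intent fires, it acts as
    a hard label; otherwise the old model's soft output guides the student.
    """
    if rule_fires:
        return [0.0] * len(old_probs) + [1.0]
    return list(old_probs) + [0.0]


# Hypothetical old model output over two old intents: inform, request.
old_probs = [0.8, 0.2]

# Hypothetical student logits over inform, request, confirm (new intent).
student_probs = softmax([2.0, 0.5, 0.1])

loss_old = cross_entropy(teacher_target(old_probs, False), student_probs)
loss_new = cross_entropy(teacher_target(old_probs, True), student_probs)
```

In this example `loss_new` is larger than `loss_old`, because the student currently places little probability on the new intent. Minimizing both kinds of loss over logged dialogs is what lets the student extend its output space without further interaction with the environment.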

Of course, systems with this architecture need to be further trained. CDSSM[16], a proposed semantic similarity matching model, can identify extended user intents without tagged data and model retraining. Based on the natural language description of user intents in the training set, CDSSM directly learns an intent embedding encoder and embeds the description of any intent into a high dimensional semantic space. In this way, the model directly generates the corresponding intent embedding based on the natural language description of the new intent and then identifies the intent. Many models that improve scalability mentioned below are designed with similar ideas. Tags are moved from the output end of the model to the input end, and neural networks are used to perform semantic encoding on tags (tag names or natural language descriptions of the tags) to obtain semantic vectors and then match their semantic similarity.
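The "move tags to the input end" idea can be sketched as nearest-neighbor matching between an utterance and intent descriptions. Note the swap: CDSSM learns a neural encoder, while this sketch uses plain bag-of-words counts so that it stays self-contained. The intent names and descriptions are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Stand-in encoder: bag-of-words counts instead of a learned network."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def classify(utterance, descriptions):
    """Pick the intent whose description is most similar to the utterance."""
    u = embed(utterance)
    return max(descriptions, key=lambda name: cosine(u, embed(descriptions[name])))


intent_descriptions = {
    "find_restaurant": "find a restaurant to eat food",
    "book_taxi": "book a taxi ride",
}

# A new intent is added by description only; nothing is retrained.
intent_descriptions["check_weather"] = "check the weather forecast"

predicted = classify("what is the weather forecast today", intent_descriptions)
```

Because the intent set is an input rather than a fixed output layer, extending it is a dictionary insert instead of a change to the model's output dimension.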

A separate paper[43] provides another idea. Through human-machine collaboration, human customer service agents are used to deal with user intents not in the training set after the system is launched. This model uses an additional neural parser to determine whether human customer service is required based on the dialog state vector extracted from the current model. If it is, the model hands the current dialog over to online customer service. If not, the model makes a prediction. The parser obtained through data learning can decide whether the current dialog contains a new intent, and responses from customer service are regarded as correct by default. This human-machine collaboration mechanism effectively deals with user intents not found in the training set during online testing and significantly improves the accuracy of the dialog.

Changing Slots and Slot Values

In dialog state tracking involving multiple or complex domains, dealing with changing slots and slot values has always been a challenge. Some slots have non-enumerative slot values, for example, the time, location, and user name. Their slot value sets, such as flights or movie theater schedules, change dynamically. In traditional DST, the slot and slot value sets remain unchanged by default, which greatly reduces the system scalability.

Google researchers[17] proposed a candidate set for slots with non-enumerative slot values. A candidate set is maintained for each slot. The candidate set contains a maximum of k possible slot values in the dialog and assigns a score to each slot value to indicate the user's preference for the slot value in the current dialog. The system uses a bidirectional RNN model to find the value of a slot in the current user statement and then scores and re-ranks it with the existing slot values in the candidate set. In this way, the DST of each round only needs to make a judgment on a limited slot value set, allowing us to track non-enumerative slot values. To track slot values not in the set, we can use a sequence tagging model[18] or a semantic similarity matching model such as the neural belief tracker[12].
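The bookkeeping behind a bounded candidate set can be sketched as follows. In the paper the scores come from a neural model; here they are hypothetical numbers, and keeping the higher score when a value reappears is an assumption made for this sketch.

```python
def update_candidates(candidates, new_scores, k):
    """Merge newly scored values into a slot's candidate set, keeping top k.

    Assumption: when a value is already present, the higher score wins.
    """
    merged = dict(candidates)
    for value, score in new_scores.items():
        merged[value] = max(score, merged.get(value, float("-inf")))
    ranked = sorted(merged.items(), key=lambda item: item[1], reverse=True)
    return dict(ranked[:k])


# Hypothetical candidate set for a "time" slot before the current round.
time_candidates = {"7pm": 0.6, "8pm": 0.3}

# Hypothetical scores for values found in the current user statement.
round_scores = {"9pm": 0.9, "8pm": 0.5}

time_candidates = update_candidates(time_candidates, round_scores, k=2)
```

The tracker never enumerates every possible time; it only ranks the handful of values that have actually appeared in the dialog.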

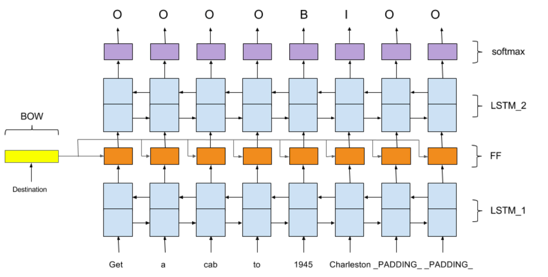

The preceding are solutions for non-fixed slot values, but what about changing slots in the dialog ontology? In one paper[19], a slot description encoder is used to encode the natural language description of existing and new slots. The obtained semantic vectors representing the slot are sent with user statements as inputs to the Bi-LSTM model, and the identified slot values are output as sequence tags, as shown in Figure 5. The paper makes a reasonable assumption that the natural language description of any slot is easy to obtain. Therefore, a concept tagger applicable to multiple domains is designed, and the slot description encoder is simply implemented as the sum of simple word vectors. Experiments show that this model can quickly adapt to new slots. Compared with the traditional method, this method greatly improves scalability.

Figure 5. Concept tagger structure

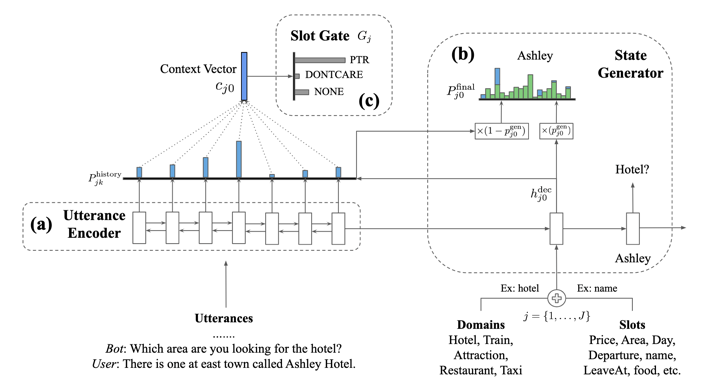

With the development of sequence-to-sequence technology in recent years, many researchers are looking at ways to use the end-to-end neural network model to generate the DST results as a sequence. Common techniques such as attention mechanisms and copy mechanisms are used to improve the generation quality. On the well-known MultiWOZ dataset for multi-domain dialogs, the team led by Professor Pascale Fung from Hong Kong University of Science and Technology used the copy network to significantly improve the recognition accuracy of non-enumerative slot values[20]. Figure 6 shows the TRADE model proposed by the team. Each time the slot value is detected, the model performs semantic encoding for different combinations of domains and slots and uses the result as the initial input of the RNN decoder. The decoder directly generates the slot value through the copy network. In this way, both non-enumerative slot values and changing slot values can be generated by the same model. Therefore, slot values can be shared between domains, allowing the model to be widely used.

Figure 6. TRADE model framework

Recent research tends to view multi-domain DST as a machine reading comprehension task and transform generative models such as TRADE into discriminative models[45]. Non-enumerative slot values are tracked by a machine reading comprehension task like SQuAD[46], in which a text span in the dialog history and questions is used as the slot value. Enumerative slot values are tracked by a multiple-choice machine reading comprehension task, in which the correct value is selected from the candidate values as the predicted slot value. By incorporating deep contextual word representations such as ELMo and BERT, these new models achieve the best results on the MultiWOZ dataset.

Changing System Actions

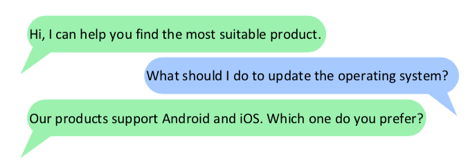

The last factor affecting scalability is the difficulty of predefining the system action space. As shown in Figure 7, when designing an electronic product recommendation system, you may ignore questions like how to upgrade the product operating system, but you cannot stop users from asking questions the system cannot answer. If the system action space is predefined, irrelevant answers may be provided to questions that have not been defined, greatly compromising the user experience.

Figure 7. Example of a dialog where the dialog system encounters an undefined system action[22]

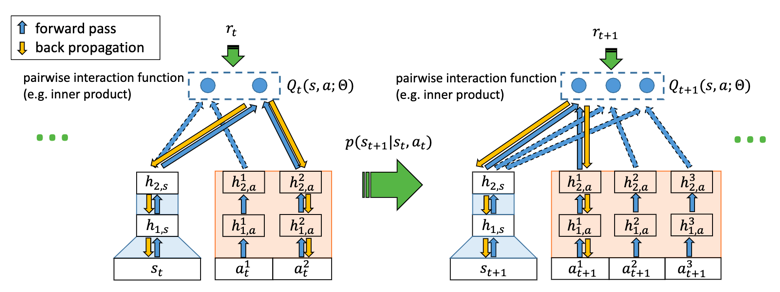

In this case, we need to design a dialog policy network that helps the system rapidly expand its actions. The first attempt to do this was made by Microsoft[21], which modified the classic DQN structure to enable reinforcement learning in an unrestricted action space. The dialog task in this paper is a text game task. Each action is a single sentence, and the number of available actions is not fixed. The story varies with the action. The authors proposed a new model, the Deep Reinforcement Relevance Network (DRRN), which matches the current dialog state with the optional system actions by semantic similarity matching to obtain the Q function. Specifically, in a round of dialog, each action text of variable length is encoded by a neural network to obtain a system action vector with a fixed length. The story background text is encoded by another neural network to obtain a dialog state vector with a fixed length. The two vectors are used to generate the final Q value through an interaction function, such as the dot product. Figure 8 shows the structure of the model designed in the paper. Experiments show that DRRN outperforms traditional DQN (using the padding technique) in the text games "Saving John" and "Machine of Death".

Figure 8. DRRN model, in which round t has 2 candidate actions, and round t+1 has three candidate actions
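The dot-product interaction can be sketched in a few lines. The vectors below are hypothetical stand-ins for the outputs of the two encoders; the point is only that the same scoring function handles two candidate actions in one round and three in the next, with no padding.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def q_values(state_vec, action_vecs):
    """Q(s, a_i) as the dot product of the state and each action vector."""
    return [dot(state_vec, a) for a in action_vecs]


def greedy_action(state_vec, action_vecs):
    """Index of the candidate action with the highest Q value."""
    qs = q_values(state_vec, action_vecs)
    return max(range(len(qs)), key=qs.__getitem__)


# Round t: two candidate actions (hypothetical encoder outputs).
state_t = [1.0, 0.0, 0.5]
actions_t = [[0.2, 0.9, 0.1], [0.8, 0.1, 0.4]]

# Round t+1: three candidate actions, scored by the same function.
state_t1 = [0.0, 1.0, 0.0]
actions_t1 = [[0.1, 0.2, 0.3], [0.5, 0.9, 0.1], [0.3, 0.4, 0.9]]

choice_t = greedy_action(state_t, actions_t)
choice_t1 = greedy_action(state_t1, actions_t1)
```

Since actions enter as inputs to be scored rather than as fixed output units, a new action text only needs to be encoded; the network shape does not change.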

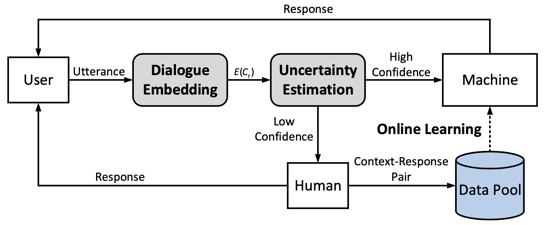

In another paper[22], the authors wanted to solve this problem from the perspective of the entire dialog system and proposed the Incremental Dialogue System (IDS), as shown in Figure 9. IDS first encodes the dialog history to obtain the context vector through the Dialog Embedding module and then uses a VAE-based Uncertainty Estimation module to evaluate, based on the context vector, a confidence level that indicates whether the current system can give correct answers. Similar to active learning, if the confidence level is higher than the threshold, DM scores all available actions and then predicts the probability distribution based on the softmax function. If the confidence level is lower than the threshold, a human annotator is asked to tag the response of the current round (select the correct response or create a new response). The new data obtained in this way is added to the data pool to update the model online. With this human-teaching method, IDS not only supports learning in an unrestricted action space, but also quickly collects high-quality data, which is quite suitable for actual production.

Figure 9. The overall framework of IDS
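The threshold decision in IDS can be sketched as a single function. In the paper the confidence comes from the VAE-based uncertainty estimator; here it is passed in as a plain number, and the `respond` function, the annotator callback, and the action names are hypothetical.

```python
import math


def softmax(scores):
    """Softmax over a dict of action scores."""
    m = max(scores.values())
    exps = {a: math.exp(s - m) for a, s in scores.items()}
    z = sum(exps.values())
    return {a: e / z for a, e in exps.items()}


def respond(confidence, action_scores, threshold, ask_annotator, data_pool, context):
    """Answer directly when confident; otherwise ask a human and log it."""
    if confidence > threshold:
        probs = softmax(action_scores)
        return max(probs, key=probs.get)
    # Low confidence: the annotator selects or creates the response.
    response = ask_annotator(context, list(action_scores))
    data_pool.append((context, response))  # kept for the online update
    return response


pool = []
scores = {"give_price": 2.0, "give_address": 0.5}


def annotator(context, options):
    # Hypothetical human answer that is not among the known actions.
    return "explain_os_upgrade"


confident = respond(0.9, scores, 0.6, annotator, pool, "how much is it")
uncertain = respond(0.2, scores, 0.6, annotator, pool, "how do I upgrade the OS")
```

Only the low-confidence round reaches the annotator, so human effort is spent on exactly the cases the current model cannot handle.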

Shortcoming 2: Insufficient Tagged Data

The extensive application of dialog systems results in diversified data requirements. To train a task-oriented dialog system, as much domain-specific data as possible is needed, but quality tagged data is costly. Scholars have tried to solve this problem in three ways: (1) using machines to tag data to reduce the tagging costs; (2) mining the dialog structure to use untagged data efficiently; and (3) optimizing the data collection policy to efficiently obtain high-quality data.

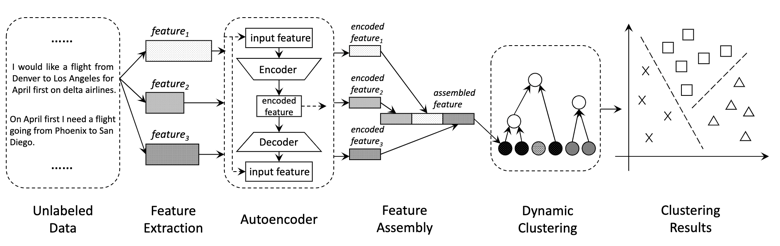

Automatic Tagging

To address the cost and inefficiency of manual tagging, scholars hope to use supervised learning and unsupervised learning to let machines assist in manual tagging. One paper[23] proposed the auto-dialabel architecture, which automatically groups intents and slots in the dialog data by using the unsupervised learning method of hierarchical clustering to automatically tag the dialog data (the specific tag of each category needs to be manually determined). This method is based on the assumption that expressions of the same intent may share similar background features. Initial features extracted by the model include word vectors, part-of-speech (POS) tags, noun word clusters, and Latent Dirichlet Allocation (LDA). All features are encoded by the auto-encoder into vectors of the same dimension and concatenated. Then, the inter-class distance calculated by the radial basis function (RBF) is used for dynamic hierarchical clustering. Classes that are closest to each other are merged automatically until the inter-class distance between the classes is greater than the threshold. Figure 10 shows the model framework.

Figure 10. Auto-dialabel model
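The merge-until-threshold loop can be sketched as below. The source only says that the inter-class distance is computed with an RBF, so the specific form used here (one minus the RBF kernel between class centroids) is an assumption, as are the toy 2-D feature vectors standing in for the auto-encoded features.

```python
import math


def rbf_distance(a, b, gamma=1.0):
    """Assumed distance: 1 - exp(-gamma * squared Euclidean distance)."""
    sq = sum((x - y) ** 2 for x, y in zip(a, b))
    return 1.0 - math.exp(-gamma * sq)


def centroid(points):
    """Mean vector of a list of points."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def cluster(points, threshold, gamma=1.0):
    """Merge the two closest classes until the closest pair exceeds threshold."""
    clusters = [[p] for p in points]
    while len(clusters) > 1:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = rbf_distance(centroid(clusters[i]), centroid(clusters[j]), gamma)
                if best is None or d < best[0]:
                    best = (d, i, j)
        if best[0] > threshold:
            break  # remaining classes are too far apart to merge
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


# Hypothetical utterance features forming two obvious groups.
features = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
groups = cluster(features, threshold=0.5)
```

The number of classes is not fixed in advance; it falls out of the threshold, which matches the idea that the set of intents and slots is discovered rather than predefined.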

In another paper[24], supervised clustering is used to implement machine tagging. The author views each dialog data record as a graph node and sees the clustering process as the process of identifying the minimum spanning forest. The model uses a support vector machine (SVM) to train the distance scoring model between nodes in the Q&A dataset through supervised learning. It then uses the structured model and the minimum subtree spanning algorithm to derive the class information corresponding to the dialog data as the hidden variable. It generates the best cluster structure to represent the user intent type.

Dialog Structure Mining

Due to the lack of high-quality tagged data for training dialog systems, finding ways to fully mine implicit dialog structures or information in the untagged dialog data has become a popular area of research. Implicit dialog structures or information contribute to the design of dialog policies and the training of dialog models to some extent.

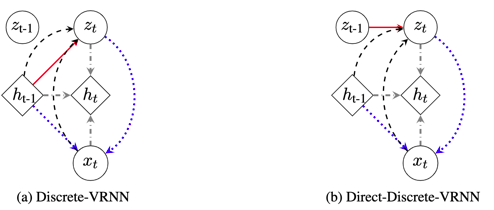

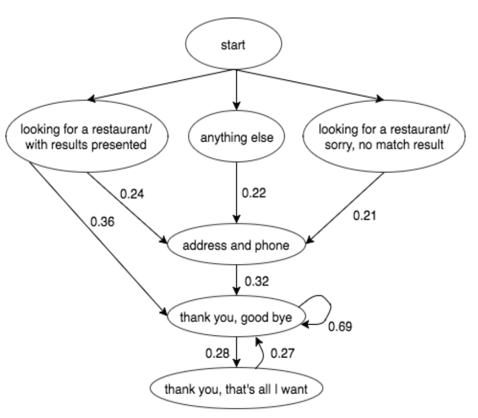

One paper[25] proposed to use unsupervised learning in a variational RNN (VRNN) to automatically learn hidden structures in dialog data. The author provides two models that can obtain the dynamic information in a dialog: Discrete-VRNN (D-VRNN) and Direct-Discrete-VRNN (DD-VRNN). As shown in Figure 11, x_t indicates the t-th round of dialog, h_t indicates the hidden variable of the dialog history, and z_t indicates the hidden variable (one-dimensional one-hot discrete variable) of the dialog structure. The difference between the two models is that for D-VRNN, the hidden variable z_t depends on h_(t-1), while for DD-VRNN, the hidden variable z_t depends on z_(t-1). Based on the maximum likelihood of the entire dialog, VRNN uses some common methods of VAE to estimate the a posteriori probability distribution of the hidden variable z_t.

Figure 11. D-VRNN and DD-VRNN

The experiments in the paper show that VRNN is superior to the traditional HMM method. VRNN also adds the dialog structure information to the reward function, supporting faster convergence of the reinforcement learning model. Figure 12 shows the transition probability of the hidden variable z_t in the restaurant domain mined by D-VRNN.

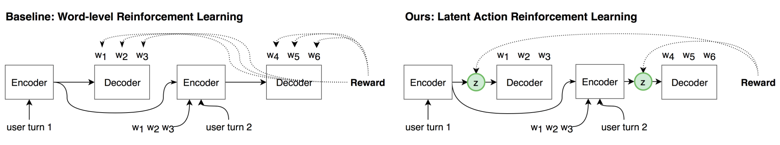

Figure 12. Dialog flow structure mined by D-VRNN from the dialog data related to restaurants

CMU scholars[26] also tried to use the VAE method to infer system actions as hidden variables and directly use them for dialog policy selection. This can alleviate the problems caused by insufficient predefined system actions. As shown in Figure 13, for simplicity, an end-to-end dialog system framework is used in the paper. The baseline model is an RL model at the word level (that is, a dialog action is a word in the vocabulary). The model uses an encoder to encode the dialog history and then uses a decoder to decode it and generate a response. The reward function directly compares the generated response statement with the real response statement. Compared with the baseline model, the latent action model adds a posterior probability inference between the encoder and the decoder and uses discrete hidden variables to represent the dialog actions without any manual intervention. The experiment shows that the end-to-end RL model based on latent actions is superior to the baseline model in terms of statement generation diversity and task completion rate.

Figure 13. Baseline model and latent action model

Data Collection Policy

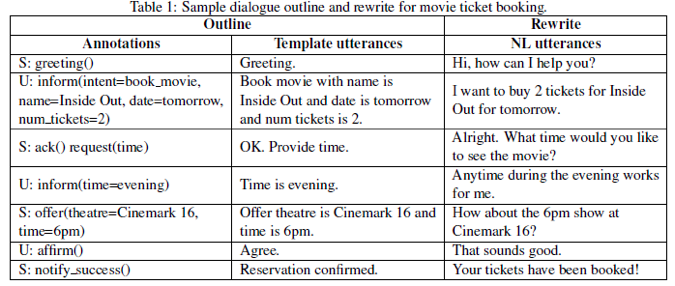

Recently, Google researchers proposed a method to quickly collect dialog data[27]: First, use two rule-based simulators to interact to generate a dialog outline, which is a dialog flow framework represented by semantic tags. Then, convert the semantic tags into natural language dialogs based on templates. Finally, rewrite the natural language statements by crowdsourcing to enrich the language expressions of the dialog data. This reverse data collection method features high collection efficiency and complete and highly available data tags, reducing the cost and workload of data collection and processing.

Figure 14. Examples of dialog outline, template-based dialog generation, and crowdsourcing-based dialog rewrite
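The outline-to-template step can be sketched as a lookup from semantic tags to sentence templates. The outline, the dialog acts, and the templates below are hypothetical; in the method described above, the outline would come from the two simulators and the rendered sentences would then be rewritten by crowd workers.

```python
# Hypothetical templates keyed by (speaker, dialog act).
TEMPLATES = {
    ("user", "inform"): "I want {slot} to be {value}.",
    ("system", "request"): "What {slot} would you like?",
}

# Hypothetical dialog outline: a dialog flow expressed as semantic tags.
outline = [
    {"speaker": "user", "act": "inform", "slot": "cuisine", "value": "thai"},
    {"speaker": "system", "act": "request", "slot": "time"},
    {"speaker": "user", "act": "inform", "slot": "time", "value": "7pm"},
]


def render(outline):
    """Turn each tagged turn into a template sentence, keeping its tags."""
    dialog = []
    for turn in outline:
        template = TEMPLATES[(turn["speaker"], turn["act"])]
        text = template.format(slot=turn["slot"], value=turn.get("value", ""))
        dialog.append((turn, text))
    return dialog


dialog = render(outline)
sentences = [text for _, text in dialog]
```

Every sentence stays paired with the tags that produced it, which is why the resulting data is fully labeled before any human rewriting happens.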

This method is a machine-to-machine (M2M) data collection policy, in which a wide range of semantic tags for dialog data are generated and then crowdsourced to generate a large number of dialog utterances. However, the generated dialogs cannot cover all the possibilities in real scenarios. In addition, the quality of the result depends on the simulator.

In relevant academic circles, two other methods are commonly used to collect data for dialog systems: human-to-machine (H2M) and human-to-human (H2H). The H2H method requires a multi-round dialog between the user, played by a crowdsourced worker, and the customer service agent, played by another crowdsourced worker. The user proposes requirements based on specified dialog targets such as buying an airplane ticket, and the customer service agent annotates the dialog tags and makes responses. This approach is called the Wizard-of-Oz framework. Many dialog datasets, such as WOZ[5] and MultiWOZ[28], are collected in this way. The H2H method helps us get dialog data that is the most similar to that of actual service scenarios. However, it is costly to design different interactive interfaces for different tasks and to clean up incorrect annotations. The H2M data collection policy allows users and trained machines to interact with each other. This way, we can directly collect data online and continuously improve the DM model through RL. The well-known DSTC2&3 dataset was collected in this way. The performance of the H2M method depends largely on the initial performance of the DM model. In addition, the data collected online has a great deal of noise, which results in high clean-up costs and affects the model optimization efficiency.

Shortcoming 3: Low Training Efficiency

With the successful application of deep RL in the game of Go, this method is also widely used in task dialog systems. For example, the ACER dialog management method in one paper[6] combines model-free deep RL with other techniques such as experience replay, trust region constraints, and pre-training. This greatly improves the training efficiency and stability of RL algorithms in task dialog systems.

However, applying the RL algorithm alone cannot meet the actual requirements of dialog systems. One reason is that dialogs lack clear rules, reward functions, simple and clear action spaces, and perfect environment simulators that can generate hundreds of millions of quality interactive data records. Dialog tasks include changing slot values, actions, and intents, which significantly increases the action space of the dialog system and makes it difficult to define. When traditional flat RL methods are used, the curse of dimensionality may occur due to one-hot encoding of all system actions. Therefore, these methods are no longer suitable for handling complex dialogs with large action spaces. For this reason, scholars have tried many other methods, including model-free RL, model-based RL, and human-in-the-loop.

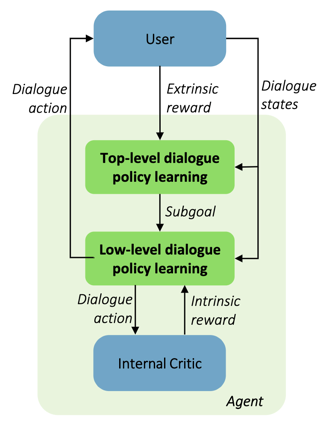

Model-Free RL - HRL

Hierarchical Reinforcement Learning (HRL) divides a complex task into multiple sub-tasks to avoid the curse of dimensionality in traditional flat RL methods. In one paper[29], HRL was applied to task dialog systems for the first time. The authors divided a complex dialog task into multiple sub-tasks by time. For example, a complex travel task can be divided into sub-tasks, such as booking tickets, booking hotels, and renting cars. Accordingly, they designed a dialog policy network of two layers. One layer selects and arranges all sub-tasks, and the other layer executes specific sub-tasks.

The DM model they proposed consists of two parts, as shown in Figure 15:

- Top-level policy: Selects a sub-task based on the dialog land.

- Depression-level policy: Completes a specific dialog action in a sub-task.

- The global dialog state tracker records the overall dialog land. Later the entire dialog job is completed, the top-level policy receives an external reward.

The model also has an internal critic module to judge the possibility of completing the sub-tasks (the degree of slot filling for sub-tasks) based on the dialog state. The low-level policy receives an intrinsic advantage from the internal critic module based on the degree of completion of the sub-task.
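The interplay of the two policy levels and the internal critic can be sketched as follows. This is a toy illustration under stated assumptions, not the model from the paper: the sub-tasks, slot names, and hand-written policies are hypothetical stand-ins for learned networks, and the simulated user always answers.

```python
import random

# Hypothetical sub-tasks and slots for a travel dialog (illustrative assumptions).
SUBTASK_SLOTS = {
    "book_flight": ["origin", "destination", "date"],
    "book_hotel": ["city", "checkin"],
}

def internal_critic(subtask, state):
    """Degree of slot filling for a sub-task, in [0, 1]."""
    slots = SUBTASK_SLOTS[subtask]
    return sum(1 for s in slots if s in state["filled"]) / len(slots)

def top_level_policy(state):
    """Select the first unfinished sub-task (stand-in for a learned policy)."""
    for subtask in SUBTASK_SLOTS:
        if internal_critic(subtask, state) < 1.0:
            return subtask
    return None

def low_level_policy(subtask, state, rng):
    """Select one unfilled slot of the current sub-task to request."""
    open_slots = [s for s in SUBTASK_SLOTS[subtask] if s not in state["filled"]]
    return rng.choice(open_slots)

def run_dialog(seed=0):
    rng = random.Random(seed)
    state = {"filled": set()}  # global dialog state tracker
    intrinsic_rewards = []
    while (subtask := top_level_policy(state)) is not None:
        before = internal_critic(subtask, state)
        slot = low_level_policy(subtask, state, rng)
        state["filled"].add(slot)  # assumption: the user always provides the value
        # Intrinsic reward: progress on the current sub-task, judged by the critic.
        intrinsic_rewards.append(internal_critic(subtask, state) - before)
    extrinsic_reward = 1.0  # external reward, given once the whole task is done
    return state, intrinsic_rewards, extrinsic_reward

state, intrinsic, extrinsic = run_dialog()
```

The low-level policy gets a signal at every turn, while the top-level policy only sees the single external reward at the end.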

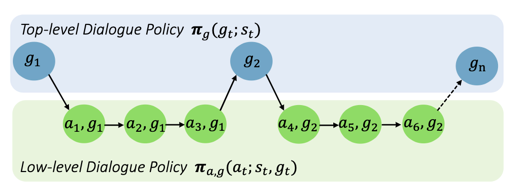

Figure 15. The HRL framework of a task-oriented dialog system

For complex dialogs, traditional RL methods select a basic system action at each step, such as querying the slot value or confirming constraints. In HRL, a set of basic actions is selected based on the top-level policy, and then a basic action is selected from the current set based on the low-level policy, as shown in Figure 16. This hierarchical division of action spaces covers the time sequence constraints between different sub-tasks, which facilitates the completion of composite tasks. In addition, the intrinsic reward effectively relieves the problem of sparse rewards, accelerating RL training, preventing frequent switching of the dialog between different sub-tasks, and improving the accuracy of action prediction. Of course, the hierarchical design of actions requires expert knowledge, and the types of sub-tasks need to be determined by experts. Recently, tools that can automatically discover dialog sub-tasks have appeared[30]. By using unsupervised learning methods, these tools automatically split the dialog state sequence of the whole dialog history, without the need to manually build a dialog sub-task structure.

Figure 16. Policy selection process of HRL

Model-Free RL - FRL

Feudal Reinforcement Learning (FRL) is a suitable solution to problems with large dimensions. HRL divides a dialog policy into sub-policies based on different task stages in the time dimension, which reduces the complexity of policy learning. FRL divides a policy in the space dimension to restrict the action range of each sub-policy, which reduces the complexity of sub-policies. FRL does not divide a task into sub-tasks. Instead, it uses abstract functions of the state space to extract useful features from dialog states. Such abstraction allows FRL to be applied and migrated between different domains, achieving high scalability.

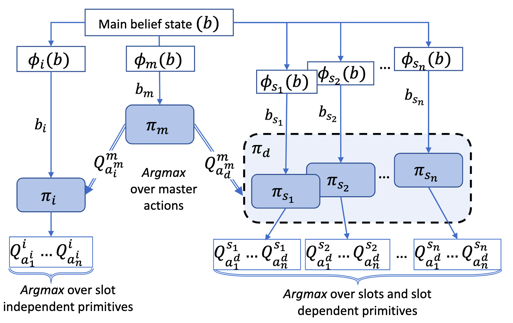

Cambridge scholars applied FRL[32] to task dialog systems for the first time to divide the action space by its relevance to the slots. In this way, only the natural structure of the action space is used, and additional expert knowledge is not required. They put forward the feudal policy structure shown in Figure 17. The decision-making process for this structure is divided into two steps:

- Determine whether the next action requires slots as parameters.

- Select the low-level policy and next action for the corresponding slot based on the decision of the first step.

Figure 17. Application of FRL in a task-oriented dialog system

In general, both HRL and FRL split the high-dimensional complex action space in different ways to address the low training efficiency of traditional RL methods due to large action space dimensions. HRL divides tasks properly in line with human understanding. However, expert knowledge is required to divide a task into sub-tasks. FRL divides complex tasks based on the logical structure of the action and does not consider mutual constraints between sub-tasks.
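The two-step decision can be sketched in a few lines. This is a simplified illustration, assuming a belief state that stores one confidence value per slot; the threshold, action names, and scoring rule are hypothetical and stand in for the learned master and slot policies.

```python
def slot_policy(confidence):
    """Shared per-slot sub-policy: sees only that slot's abstracted feature.

    Returns (score, act). Hand-written stand-in for a learned sub-policy.
    """
    if confidence == 0.0:
        return 1.0, "request"
    return 1.0 - confidence, "confirm"

def feudal_policy(belief, threshold=0.8):
    """Step 1: decide whether a slot-dependent action is needed.
    Step 2: let the slot sub-policy score each uncertain slot and pick the best.
    """
    uncertain = {s: c for s, c in belief.items() if c < threshold}
    if not uncertain:
        return "inform"  # slot-independent action
    scored = {s: slot_policy(c) for s, c in uncertain.items()}
    best = max(scored, key=lambda s: scored[s][0])
    return f"{scored[best][1]}({best})"

print(feudal_policy({"area": 0.0, "food": 0.5}))
```

Because `slot_policy` only looks at a slot's confidence and never at its name, the same sub-policy can be reused when new slots or domains are added, which is the scalability argument made above.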

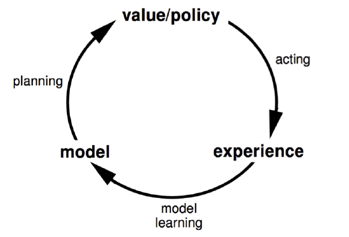

Model-Based RL

The preceding RL methods are model-free. With these methods, a large amount of weakly supervised data is obtained through trial-and-error interactions with the environment, and then a value network or policy network is trained accordingly. The process is independent of the environment. There is also model-based RL, as shown in Figure 18. Model-based RL directly models and interacts with the environment to learn a probability transition function of state and reward, namely, an environment model. Then, the system interacts with the environment model to generate more training data. Therefore, model-based RL is more efficient than model-free RL, especially when it is costly to interact with the environment. However, the resulting performance depends on the quality of environment modeling.

Figure 18. Model-based RL procedure

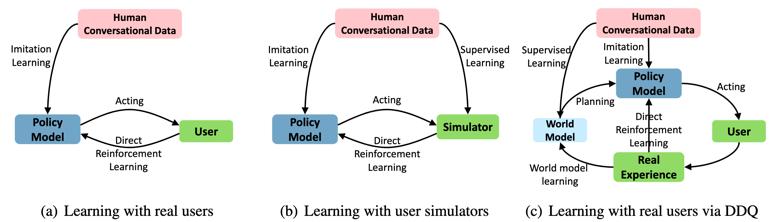

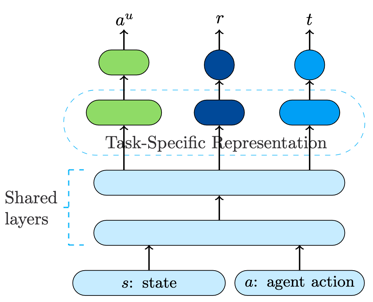

Using model-based RL to improve training efficiency is currently an active field of research. Microsoft first applied the classic Deep Dyna-Q (DDQ) algorithm to dialogs[33], as shown in panel (c) of Figure 19. Before DDQ training starts, we use a small amount of existing dialog data to pre-train the policy model and the world model. Then, we train DDQ by repeating the following steps:

- Direct RL: Interact with real users online, update policy models, and store dialog data.

- World model training: Update the world model based on collected real dialog data.

- Planning: Use the dialog data obtained from interaction with the world model to train the policy model.

The world model (as shown in Figure 20) is a neural network that models the probability of environment state transition and rewards. The inputs are the current dialog state and system action. The outputs are the next user action, environment rewards, and dialog termination variables. The world model reduces the human-machine interaction data required by DDQ for online RL (as shown in panel (a) of Figure 19) and avoids ineffective interactions with user simulators (as shown in panel (b) of Figure 19).

Figure 19. Three RL architectures

Figure 20. Structure of the world model

Similar to the user simulator in the dialog field, the world model can simulate real user actions and interact with the system's DM. However, the user simulator is essentially an external environment and is used to simulate real users, while the world model is an internal model of the system.

Microsoft researchers have made improvements based on DDQ. To improve the authenticity of the dialog data generated by the world model, they proposed[34] to improve the quality of the generated dialog data through adversarial training. In a paper[35], they discussed when to use data generated through interaction with the real environment and when to use data generated through interaction with the world model. They also discussed a unified dialog framework to include interaction with real users in another paper[36]. This human-teaching concept has attracted attention in the industry as it can assist in the building of DMs. This will be further explained in the following sections.
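The three DDQ steps can be sketched as a training loop. This is a deliberately simplified illustration: a tabular Q-function stands in for the policy network, a lookup table stands in for the neural world model, and `real_user_step` is a hypothetical toy slot-filling environment rather than a real user.

```python
import random
from collections import defaultdict

N_SLOTS = 3
ACTIONS = ["request_next_slot", "end_dialog"]

def real_user_step(state, action):
    """Toy stand-in for a real user: returns (next_state, reward, done)."""
    if action == "end_dialog":
        return state, (10.0 if state == N_SLOTS else -10.0), True
    return min(state + 1, N_SLOTS), -1.0, False

def q_update(q, s, a, r, s2, done, alpha=0.5, gamma=0.9):
    """One Q-learning update of the (tabular) policy model."""
    target = r if done else r + gamma * max(q[(s2, b)] for b in ACTIONS)
    q[(s, a)] += alpha * (target - q[(s, a)])

def choose(q, s, rng, eps=0.2):
    """Epsilon-greedy action selection."""
    if rng.random() < eps:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[(s, a)])

def train_ddq(episodes=200, planning_steps=5, max_turns=20, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # policy model
    world_model = {}        # (state, action) -> (next_state, reward, done)
    for _ in range(episodes):
        s = 0
        for _ in range(max_turns):
            a = choose(q, s, rng)
            s2, r, done = real_user_step(s, a)   # 1) direct RL on real experience
            q_update(q, s, a, r, s2, done)
            world_model[(s, a)] = (s2, r, done)  # 2) world model training
            for _ in range(planning_steps):      # 3) planning on simulated experience
                ps, pa = rng.choice(list(world_model))
                ps2, pr, pdone = world_model[(ps, pa)]
                q_update(q, ps, pa, pr, ps2, pdone)
            s = s2
            if done:
                break
    return q, world_model

def greedy_dialog(q):
    """Roll out the learned policy without exploration."""
    s, done, actions = 0, False, []
    while not done and len(actions) < 10:
        a = max(ACTIONS, key=lambda act: q[(s, act)])
        s, _, done = real_user_step(s, a)
        actions.append(a)
    return actions

q, world_model = train_ddq()
print(greedy_dialog(q))
```

Each real turn is followed by several planning updates that cost no additional user interaction, which is where the efficiency gain described above comes from.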

Human-in-the-Loop

We hope to make full use of human knowledge and experience to generate high-quality data and improve the efficiency of model training. Human-in-the-loop RL[37] is a method to introduce human beings into robot training. Through designed human-machine interaction methods, humans can efficiently guide the training of RL models. To further improve the training efficiency of task dialog systems, researchers are working to design an effective human-in-the-loop method based on the dialog features.

Figure 21. Composite learning combining supervised pre-training, imitation learning, and online RL

Google researchers proposed a composite learning method combining human teaching and RL[37], which adds a human teaching phase between supervised pre-training and online RL, allowing humans to tag data to avoid the covariate shift caused by supervised pre-training[42]. Amazon researchers also proposed a similar human teaching framework[37]: In each round of dialog, the system recommends four responses to the customer service expert. The customer service expert determines whether to select one of these responses or create a new response. Finally, the customer service expert sends the selected or created response to the user. With this method, developers can quickly update the capabilities of the dialog system.

In the preceding method, the system passively receives the data tagged by humans. However, a good system should actively ask questions and seek help from humans. One paper[40] introduced the companion learning architecture (as shown in Figure 22), which adds the role of a teacher (human) to the traditional RL framework. The teacher can correct the responses of the dialog system (the student, represented by the switch on the left side of the figure) and evaluate the student's response in the form of intrinsic reward (the switch on the right side of the figure). To implement active learning, the authors put forward the concept of dialog decision certainty. The student policy network is sampled multiple times with dropout to estimate the approximate maximum probability of the desired action. The moving average of this maximum probability over several dialog rounds is then used as the decision certainty of the student policy network. If the calculated certainty is lower than the target value, the system determines whether a teacher is required to correct errors and provide reward functions based on the difference between the calculated decision certainty and the target value. If the calculated certainty is higher than the target value, the system stops learning from the teacher and makes judgments on its own.

Figure 22. The teacher corrects the student's response (on the left) or evaluates the student's response (on the right).

The key to active learning is to estimate the certainty of the dialog system regarding its own decisions. In addition to applying dropout to policy networks, other methods include using hidden variables as condition variables to calculate the Jensen-Shannon divergence of policy networks[22] and making judgments based on the dialog success rate of the current system[36].
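The certainty estimate can be sketched as follows. This is a minimal illustration, not the paper's implementation: the "policy network" is a toy linear layer with dropout on its inputs, the per-turn certainty is taken as the vote share of the most frequently chosen action, and the teacher decision is reduced to a plain threshold on the moving average. All names and default values are assumptions.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def policy_forward(features, weights, rng, drop_prob):
    """One stochastic forward pass of a toy linear policy with input dropout."""
    keep = 1.0 - drop_prob
    dropped = [f / keep if rng.random() < keep else 0.0 for f in features]
    logits = [sum(w * x for w, x in zip(row, dropped)) for row in weights]
    return softmax(logits)

def turn_certainty(features, weights, rng, n_samples=50, drop_prob=0.2):
    """Sample the policy several times; return the top action's vote share."""
    votes = [0] * len(weights)
    for _ in range(n_samples):
        probs = policy_forward(features, weights, rng, drop_prob)
        votes[probs.index(max(probs))] += 1
    return max(votes) / n_samples

def needs_teacher(turn_certainties, target=0.8, window=3):
    """Compare the moving average over recent turns with the target value."""
    recent = turn_certainties[-window:]
    moving_avg = sum(recent) / len(recent)
    return moving_avg < target, moving_avg

weights = [[2.0, 0.0], [0.0, 1.0]]  # two actions, two input features
history = [turn_certainty([1.0, 0.5], weights, random.Random(t)) for t in range(3)]
ask, certainty = needs_teacher(history)
```

When the dropout samples disagree, the vote share drops and the student asks for help; when they agree, it acts on its own.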
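For reference, the Jensen-Shannon divergence between two action distributions can be computed as below. How the cited work conditions the policy on hidden variables is not spelled out here, so the sketch only shows the divergence itself, applied to two hypothetical action distributions.

```python
import math

def kl(p, q):
    """Kullback-Leibler divergence KL(p || q) in nats; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence: symmetric, bounded by ln 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

# Two hypothetical action distributions from the same policy under different conditions.
agree = js_divergence([0.7, 0.2, 0.1], [0.7, 0.2, 0.1])
disagree = js_divergence([1.0, 0.0], [0.0, 1.0])
```

A value near zero means the policy produces the same action distribution in both cases and can be treated as certain; a value near ln 2 means the two distributions barely overlap.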

Dialog Management Framework of the Intelligent Robot Conversational AI Team

To ensure stability and interpretability, the industry primarily uses rule-based DM models. The Intelligent Robot Conversational AI Team at Alibaba's DAMO Academy began to explore DM models last year. When building a real dialog system, we need to solve two problems: (1) how to obtain a large amount of dialog data in a specific scenario and (2) how to use algorithms to maximize the value of data.

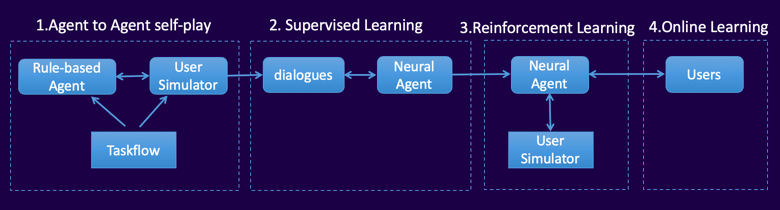

Currently, we plan to complete the model framework design in four steps, as shown in Figure 23.

Figure 23. Four steps of DM model design

- Step 1: First, use the dialog studio independently developed by the Intelligent Robot Conversational AI team to quickly build a dialog engine called TaskFlow based on rule-based dialog flows and build a user simulator with similar dialog flows. Then, have the user simulator and TaskFlow continuously interact with each other to generate a large amount of dialog data.

- Step 2: Train a neural network through supervised learning to build a preliminary DM model that has capabilities basically equivalent to a rule-based dialog engine. The model can be expanded by combining semantic similarity matching and end-to-end generation. Dialog tasks with a large action space are divided using the HRL method.

- Step 3: In the development phase, make the system interact with an improved user simulator or AI trainers and continuously enhance the system dialog capability based on off-policy ACER RL algorithms.

- Step 4: After the human-machine interaction experience is verified, launch the system and introduce human roles to collect real user interaction data. In addition, use some UI designs to easily introduce user feedback to continuously update and enhance the model. The obtained human-machine dialog data will be further analyzed and mined for customer insight.
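Step 1 can be pictured with a small self-play loop. The sketch below does not use TaskFlow or the dialog studio, whose interfaces are not described here; `rule_based_system` and `user_simulator` are hypothetical stand-ins, and the meeting-room slots and values are invented for illustration.

```python
import random

SLOTS = ["date", "time", "room_size"]  # hypothetical meeting-room booking slots

def rule_based_system(filled):
    """Rule-based flow: request the first missing slot, then book."""
    for slot in SLOTS:
        if slot not in filled:
            return ("request", slot)
    return ("book", None)

def user_simulator(system_action, goal):
    """Answer a request with the value from the simulated user's goal."""
    act, slot = system_action
    if act == "request":
        return ("inform", slot, goal[slot])
    return ("bye", None, None)

def generate_dialog(rng):
    """Let the two sides interact until booking; log one record per turn."""
    goal = {
        "date": rng.choice(["Mon", "Tue"]),
        "time": rng.choice(["10:00", "15:00"]),
        "room_size": rng.choice(["small", "large"]),
    }
    filled, turns = {}, []
    while True:
        sys_act = rule_based_system(filled)
        usr_act = user_simulator(sys_act, goal)
        turns.append({"state": dict(filled), "system": sys_act, "user": usr_act})
        if sys_act[0] == "book":
            return turns
        filled[usr_act[1]] = usr_act[2]

corpus = [generate_dialog(random.Random(i)) for i in range(100)]
```

Each logged (state, system action) pair is a labeled example that the supervised model in Step 2 could be trained on.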

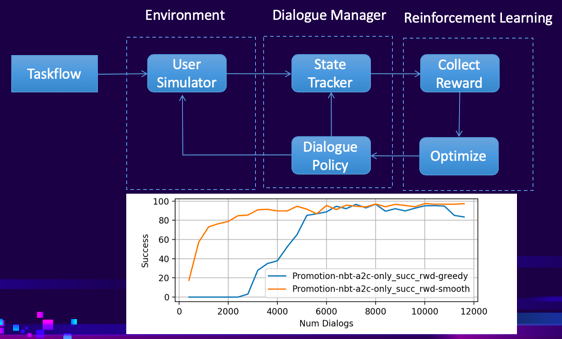

At present, the RL-based DM model we developed can complete 80% of the dialog with the user simulator for moderately complex dialog tasks, such as booking a meeting room, as shown in Figure 24.

Figure 24. Framework and evaluation indicators of the DM model developed by the Intelligent Robot Conversational AI team

Summary

This article provides a detailed introduction to the latest research on DM models, focusing on three shortcomings of traditional DM models:

- Poor scalability

- Insufficient tagged data

- Low training efficiency

To address scalability, common methods for processing changes in user intents, dialog bodies, and the system action space include semantic similarity matching, knowledge distillation, and sequence generation. To address insufficient tagged data, methods include automated machine tagging, effective dialog structure mining, and efficient data collection policies. To address the low training efficiency of traditional DM models, methods such as HRL and FRL are used to divide action spaces into different layers. Model-based RL methods are also used to model the environment and improve training efficiency. Introducing human-in-the-loop into the dialog system training framework is also a current focus of research. Finally, I discussed the current progress of the DM model developed by the Intelligent Robot Conversational AI team of Alibaba's DAMO Academy. I hope this summary can provide some new insights to support your own research on DM.

References

[1]. Turing A M. Computing machinery and intelligence[J]. Mind, 1950, 59(236): 433-460.

[2]. Weizenbaum J. ELIZA---a computer program for the study of natural language communication between man and machine[J]. Communications of the ACM, 1966, 9(1): 36-45.

[3]. Young S, Gašić M, Thomson B, et al. POMDP-based statistical spoken dialog systems: A review[J]. Proceedings of the IEEE, 2013, 101(5): 1160-1179.

[4]. Bordes A, Boureau Y L, Weston J. Learning end-to-end goal-oriented dialog[J]. arXiv preprint arXiv:1605.07683, 2016.

[5]. Wen T H, Vandyke D, Mrksic N, et al. A network-based end-to-end trainable task-oriented dialogue system[J]. arXiv preprint arXiv:1604.04562, 2016.

[6]. Su P H, Budzianowski P, Ultes S, et al. Sample-efficient actor-critic reinforcement learning with supervised data for dialogue management[J]. arXiv preprint arXiv:1707.00130, 2017.

[7]. Serban I V, Sordoni A, Lowe R, et al. A hierarchical latent variable encoder-decoder model for generating dialogues[C]//Thirty-First AAAI Conference on Artificial Intelligence. 2017.

[8]. Berant J, Chou A, Frostig R, et al. Semantic parsing on freebase from question-answer pairs[C]//Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing. 2013: 1533-1544.

[9]. Dhingra B, Li L, Li X, et al. Towards end-to-end reinforcement learning of dialogue agents for information access[J]. arXiv preprint arXiv:1609.00777, 2016.

[10]. Lei W, Jin X, Kan M Y, et al. Sequicity: Simplifying task-oriented dialogue systems with single sequence-to-sequence architectures[C]//Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 2018: 1437-1447.

[11]. Madotto A, Wu C S, Fung P. Mem2seq: Effectively incorporating knowledge bases into end-to-end task-oriented dialog systems[J]. arXiv preprint arXiv:1804.08217, 2018.

[12]. Mrkšić N, Séaghdha D O, Wen T H, et al. Neural belief tracker: Data-driven dialogue state tracking[J]. arXiv preprint arXiv:1606.03777, 2016.

[13]. Ramadan O, Budzianowski P, Gašić M. Large-scale multi-domain belief tracking with knowledge sharing[J]. arXiv preprint arXiv:1807.06517, 2018.

[14]. Weisz G, Budzianowski P, Su P H, et al. Sample efficient deep reinforcement learning for dialogue systems with large action spaces[J]. IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP), 2018, 26(11): 2083-2097.

[15]. Wang W, Zhang J, Zhang H, et al. A Teacher-Student Framework for Maintainable Dialog Manager[C]//Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing. 2018: 3803-3812.

[16]. Yun-Nung Chen, Dilek Hakkani-Tur, and Xiaodong He, "Zero-Shot Learning of Intent Embeddings for Expansion by Convolutional Deep Structured Semantic Models," in Proceedings of The 41st IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2016), Shanghai, China, March 20-25, 2016. IEEE.

[17]. Rastogi A, Hakkani-Tür D, Heck L. Scalable multi-domain dialogue state tracking[C]//2017 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU). IEEE, 2017: 561-568.

[18]. Mesnil G, He X, Deng L, et al. Investigation of recurrent-neural-network architectures and learning methods for spoken language understanding[C]//Interspeech. 2013: 3771-3775.

[19]. Bapna A, Tur G, Hakkani-Tur D, et al. Towards zero-shot frame semantic parsing for domain scaling[J]. arXiv preprint arXiv:1707.02363, 2017.

[20]. Wu C S, Madotto A, Hosseini-Asl E, et al. Transferable Multi-Domain State Generator for Task-Oriented Dialogue Systems[J]. arXiv preprint arXiv:1905.08743, 2019.

[21]. He J, Chen J, He X, et al. Deep reinforcement learning with a natural language action space[J]. arXiv preprint arXiv:1511.04636, 2015.

[22]. Wang W, Zhang J, Li Q, et al. Incremental Learning from Scratch for Task-Oriented Dialogue Systems[J]. arXiv preprint arXiv:1906.04991, 2019.

[23]. Shi C, Chen Q, Sha L, et al. Auto-Dialabel: Labeling Dialogue Data with Unsupervised Learning[C]//Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing. 2018: 684-689.

[24]. Haponchyk I, Uva A, Yu S, et al. Supervised clustering of questions into intents for dialog system applications[C]//Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing. 2018: 2310-2321.

[25]. Shi W, Zhao T, Yu Z. Unsupervised Dialog Structure Learning[J]. arXiv preprint arXiv:1904.03736, 2019.

[26]. Zhao T, Xie K, Eskenazi M. Rethinking action spaces for reinforcement learning in end-to-end dialog agents with latent variable models[J]. arXiv preprint arXiv:1902.08858, 2019.

[27]. Shah P, Hakkani-Tur D, Liu B, et al. Bootstrapping a neural conversational agent with dialogue self-play, crowdsourcing and on-line reinforcement learning[C]//Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 3 (Industry Papers). 2018: 41-51.

[28]. Budzianowski P, Wen T H, Tseng B H, et al. Multiwoz-a large-scale multi-domain wizard-of-oz dataset for task-oriented dialogue modelling[J]. arXiv preprint arXiv:1810.00278, 2018.

[29]. Peng B, Li X, Li L, et al. Composite task-completion dialogue policy learning via hierarchical deep reinforcement learning[J]. arXiv preprint arXiv:1704.03084, 2017.

[30]. Kristianto G Y, Zhang H, Tong B, et al. Autonomous Sub-domain Modeling for Dialogue Policy with Hierarchical Deep Reinforcement Learning[C]//Proceedings of the 2018 EMNLP Workshop SCAI: The 2nd International Workshop on Search-Oriented Conversational AI. 2018: 9-16.

[31]. Tang D, Li X, Gao J, et al. Subgoal discovery for hierarchical dialogue policy learning[J]. arXiv preprint arXiv:1804.07855, 2018.

[32]. Casanueva I, Budzianowski P, Su P H, et al. Feudal reinforcement learning for dialogue management in large domains[J]. arXiv preprint arXiv:1803.03232, 2018.

[33]. Peng B, Li X, Gao J, et al. Deep dyna-q: Integrating planning for task-completion dialogue policy learning[J]. ACL 2018.

[34]. Su S Y, Li X, Gao J, et al. Discriminative deep dyna-q: Robust planning for dialogue policy learning. EMNLP, 2018.

[35]. Wu Y, Li X, Liu J, et al. Switch-based active deep dyna-q: Efficient adaptive planning for task-completion dialogue policy learning. AAAI, 2019.

[36]. Zhang Z, Li X, Gao J, et al. Budgeted Policy Learning for Task-Oriented Dialogue Systems. ACL, 2019.

[37]. Abel D, Salvatier J, Stuhlmüller A, et al. Agent-agnostic human-in-the-loop reinforcement learning[J]. arXiv preprint arXiv:1701.04079, 2017.

[38]. Liu B, Tur G, Hakkani-Tur D, et al. Dialogue learning with human teaching and feedback in end-to-end trainable task-oriented dialogue systems[J]. arXiv preprint arXiv:1804.06512, 2018.

[39]. Lu Y, Srivastava M, Kramer J, et al. Goal-Oriented End-to-End Conversational Models with Profile Features in a Real-World Setting[C]//Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Industry Papers). 2019: 48-55.

[40]. Chen L, Zhou X, Chang C, et al. Agent-aware dropout dqn for safe and efficient on-line dialogue policy learning[C]//Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. 2017: 2454-2464.

[41]. Gao J, Galley M, Li L. Neural approaches to conversational AI[J]. Foundations and Trends® in Information Retrieval, 2019, 13(2-3): 127-298.

[42]. Ross S, Gordon G, Bagnell D. A reduction of imitation learning and structured prediction to no-regret online learning[C]//Proceedings of the fourteenth international conference on artificial intelligence and statistics. 2011: 627-635.

[43]. Rajendran J, Ganhotra J, Polymenakos L C. Learning End-to-End Goal-Oriented Dialog with Maximal User Task Success and Minimal Human Agent Use[J]. Transactions of the Association for Computational Linguistics, 2019, 7: 375-386.

[44]. Mrkšić N, Vulić I. Fully Statistical Neural Belief Tracking[C]//Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers). 2018: 108-113.

[45]. Zhou L, Small M. Multi-domain Dialogue State Tracking as Dynamic Knowledge Graph Enhanced Question Answering[J]. arXiv preprint arXiv:1911.06192, 2019.

[46]. Rajpurkar P, Jia R, Liang P. Know What You Don't Know: Unanswerable Questions for SQuAD[J]. arXiv preprint arXiv:1806.03822, 2018.

[47]. Zhang J G, Hashimoto K, Wu C S, et al. Find or Classify? Dual Strategy for Slot-Value Predictions on Multi-Domain Dialog State Tracking[J]. arXiv preprint arXiv:1910.03544, 2019.


Disclaimer: The information presented in this blog is for reference only, intended to give readers a better understanding of the concepts in dialog management model research. Alibaba Cloud does not engage in dialog data collection without customers' consent.


Source: https://www.alibabacloud.com/blog/progress-in-dialog-management-model-research_596140
